How Human Intuition Improves by Using Optimizers: Learnings from DE Shaw

Cameron Hight shares his thoughts on DE Shaw’s “Machine Teaching: What I Learned From My Optimizer”.

From DE Shaw’s “Machine Teaching: What I Learned From My Optimizer”:

"A question we’re occasionally asked is why we use optimizers in discretionary investing at all.

One reason we find it valuable is that, through their objectivity and computational power, optimizers help human traders think through the multilayered implications of their forecasts and positions.

Optimizers demand inputs. They require traders to quantify their beliefs and assumptions and to analyze each component of their forecast individually."

YES! Optimizers are not 'optimal', but they are critical collaborators for humans. They require explicit, quantified inputs. They work better when forecasts are broken into their components (reward, risk, and probabilities of each).
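To make "explicit, quantified inputs" concrete, here is a minimal Python sketch of a forecast broken into its components. The `Scenario` class, the `probability_weighted_return` helper, and all of the numbers are hypothetical illustrations, not taken from DE Shaw's paper or from Alpha Theory's product.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    probability: float   # analyst's subjective probability of this outcome
    target_price: float  # price expected if this outcome plays out


def probability_weighted_return(current_price, scenarios):
    """Expected return implied by a set of mutually exclusive scenarios."""
    total = sum(s.probability for s in scenarios)
    if abs(total - 1.0) > 1e-9:
        # Forcing probabilities to sum to 1 is part of "quantifying beliefs".
        raise ValueError(f"probabilities sum to {total}, expected 1.0")
    expected_price = sum(s.probability * s.target_price for s in scenarios)
    return expected_price / current_price - 1.0


# Made-up inputs: a reward case and a risk case for a stock trading at 100.
scenarios = [
    Scenario("reward", probability=0.6, target_price=130.0),
    Scenario("risk", probability=0.4, target_price=80.0),
]
pwr = probability_weighted_return(100.0, scenarios)
print(f"probability-weighted return: {pwr:.1%}")
```

Writing the forecast this way exposes each piece (reward, risk, and the probability of each) to challenge on its own, rather than burying them in a single gut-feel number.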

"Imagine a portfolio manager has a new trade idea and an intuition about what size it should hold in their portfolio. They add the trade’s forecast and other parameters to their optimizer. If the optimizer’s suggested trade size diverges meaningfully from the manager’s initial intuition, what does that mean, and what should they do next? The answer is likely to lie somewhere between “do exactly what the optimizer says” and “ignore the optimizer.”

In this situation, assuming the optimizer’s design is sound, a starting framework might be to assume that the gap between intuition and optimizer can be explained by some combination of (a) inaccurate inputs and (b) biased or incomplete intuition. In other words, the manager might ask: Why does the optimizer’s desired trade size differ so meaningfully from what I expected? Did I miss something fundamental when estimating my parameters? If not, what is the optimizer’s output telling me about my own assumptions, judgment, and possible biases?

With these questions in mind, the manager may want to iterate with the optimizer to identify variables to which the suggested position size is most sensitive, and then challenge those inputs. How confident are they in their estimates, and if their error bars are wide, how can they communicate that to the optimizer? Are there variables they’re implicitly considering that aren’t reflected in the optimization? Is the optimizer aware of a relevant variable that they’ve overlooked?

They might also find it helpful to work backward, finding sets of inputs that yield a suggested position size from the optimizer that matches their intuition. Are those inputs even plausible? Iterating with an optimizer in this way can challenge the manager’s assumptions, and hopefully sharpen their judgment."

The above may be the best description of how managers should utilize Alpha Theory in their day-to-day operations. When Alpha Theory’s suggested position size doesn’t match your intuition, there are three questions to ask: 1) Are my assumptions wrong? 2) Is there something that Alpha Theory is not considering? 3) Is my intuition wrong? What an amazing way to describe how a manager should interact with Alpha Theory.
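The two exercises DE Shaw describes, finding the inputs the suggested size is most sensitive to and working backward from an intuited size, can be sketched in a few lines of Python. The sizing rule below is a toy single-asset mean-variance formula chosen purely for illustration; it is an assumption, not DE Shaw's optimizer and not Alpha Theory's sizing logic. All function names and numbers are hypothetical.

```python
def suggested_size(expected_return, volatility, risk_aversion=4.0, max_size=0.10):
    """Toy rule (an assumption): size = return / (risk_aversion * variance), capped."""
    raw = expected_return / (risk_aversion * volatility ** 2)
    return max(0.0, min(max_size, raw))


def sensitivities(inputs, bump=0.10):
    """Change in suggested size when each input is raised by `bump` (relative)."""
    base = suggested_size(**inputs)
    changes = {}
    for name, value in inputs.items():
        bumped = {**inputs, name: value * (1 + bump)}
        changes[name] = suggested_size(**bumped) - base
    return changes


def implied_return(target_size, volatility, risk_aversion=4.0, max_size=0.10):
    """Work backward by bisection: which expected return yields target_size?"""
    if not 0.0 < target_size < max_size:
        raise ValueError("target_size must lie strictly between 0 and max_size")
    lo, hi = 0.0, 1.0
    if suggested_size(hi, volatility, risk_aversion, max_size) < target_size:
        raise ValueError("target_size is unreachable for returns up to 100%")
    while hi - lo > 1e-10:
        mid = (lo + hi) / 2
        if suggested_size(mid, volatility, risk_aversion, max_size) < target_size:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Made-up inputs for one trade idea.
inputs = {"expected_return": 0.02, "volatility": 0.30, "risk_aversion": 4.0}
base_size = suggested_size(**inputs)

# Which input moves the suggested size the most when bumped by 10%?
changes = sensitivities(inputs)
most_sensitive = max(changes, key=lambda k: abs(changes[k]))

# The manager's gut says 3%: what expected return would justify that size?
needed = implied_return(0.03, volatility=0.30)

print(f"suggested size: {base_size:.2%}, most sensitive input: {most_sensitive}")
print(f"expected return implied by a 3% position: {needed:.2%}")
```

With these made-up numbers, the rule suggests a position of roughly 5.6% while intuition says 3%. Matching intuition would require an expected return of about 1.1% rather than 2%, which is exactly the kind of gap that prompts the three questions above.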

"The observations we share are the result of years of iterative interaction between human and machine—machine teaching. At the heart of building an optimizer, and using and learning from it, is iterative interaction.

While the science of optimization is heavily grounded in math, the art of optimization—improving design, inputs, and hopefully outputs—comes from confronting it with human intuition, and vice versa. It’s this iterative process that provides learning opportunities for the human practitioner."

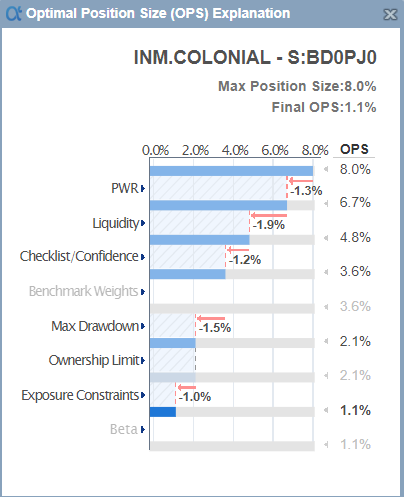

Alpha Theory is not a traditional optimizer; it is a rules engine. The belief is that discretionary managers are highly skilled at forecasting and at knowing what matters when sizing a position. Their skill deficiency lies in translating that forecasting and position-sizing knowledge into a portfolio. To better connect with a manager’s intuition, Alpha Theory is skewed toward the 'art' side of optimization. A set of human intuition-based portfolio rules and human-generated research inputs goes in, and the output is a set of position sizes. We’ve even built an explainer tool that shows the portfolio manager how the math was performed, to deeply connect the output with intuition (see below).

These explanation graphics 'teach' managers how their rules and forecasts translate into position sizes. We’ve created 'sandboxing' capabilities with interactive sensitivity so that managers can adjust their probabilities, estimates, multiples, conviction levels, etc., and immediately see the impact on the position size. In the future, we will provide 'sandboxing' simulations that let managers experiment with how changes in assumptions change the performance of the fund.
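As a rough illustration of the rules-in, sizes-out idea with an explainable result, here is a minimal Python sketch. The `Rules` and `Research` classes, the linear scaling rule, and every threshold and price are hypothetical assumptions made for this example; they are not Alpha Theory's actual rules or calculations.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Rules:
    min_return: float = 0.05  # below this expected return, hold nothing
    max_return: float = 0.40  # at or above this, hold the maximum size
    max_size: float = 0.08    # largest position as a fraction of the fund


@dataclass(frozen=True)
class Research:
    price: float
    upside_price: float
    downside_price: float
    p_upside: float
    conviction: float = 1.0   # 0..1 multiplier applied to the final size


def explain_size(research, rules):
    """Return every intermediate step so the math behind the size is visible."""
    expected_price = (research.p_upside * research.upside_price
                      + (1 - research.p_upside) * research.downside_price)
    expected_return = expected_price / research.price - 1.0
    # Scale linearly from 0 at min_return to 1 at max_return, clamped to [0, 1].
    scale = (expected_return - rules.min_return) / (rules.max_return - rules.min_return)
    scale = max(0.0, min(1.0, scale))
    size = scale * rules.max_size * research.conviction
    return {"expected_return": expected_return, "scale": scale, "size": size}


rules = Rules()
base = Research(price=100.0, upside_price=150.0, downside_price=70.0, p_upside=0.6)

# "Sandbox": change one assumption and compare, leaving the original untouched.
what_if = replace(base, p_upside=0.7)

for label, research in [("base", base), ("what-if", what_if)]:
    steps = explain_size(research, rules)
    print(label, {k: round(v, 4) for k, v in steps.items()})
```

Returning the intermediate steps rather than only the final number is the design choice that matters here: it lets a manager see which rule or input produced the size and test an alternative assumption without altering the original research.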

"In these discretionary contexts, we’ve found that beyond helping humans make decisions about the portfolio at hand, optimizers through their output can also teach humans—including how to better design and interact with an optimizer itself, and, importantly, how to counter behavioral traps and cognitive biases when making investment decisions.

One type of lesson we’ve learned from working with optimizers relates to the behavior of optimizers themselves. For example, they sometimes produce outputs that are unexpected or counterintuitive. In these cases, there can be an immediate lesson about an optimizer’s design. But often there is also an embedded lesson that informs an investor’s own intuition.

Sometimes human intervention is justified, and that intervention can take many forms, such as adding a risk factor, changing limits or other parameters, updating an approach to calculating critical inputs, or even putting aside the optimizer altogether.

We believe situations like these should be approached with intellectual humility. Otherwise, the temptation to tinker might override many of an optimizer’s benefits we’ve described above."

I appreciate DE Shaw’s willingness to share their knowledge with others in the investing community. And, while written from an investor’s perspective, these lessons apply to any field where humans and machines collaborate in decision-making. I’ll leave it to the folks at DE Shaw to sum up:

"It may seem trite in this period of rampant proliferation of artificial intelligence, but we find that a collaborative relationship between human and machine can be powerful in harnessing the comparative advantages of each. We’ve seen how our traders and portfolio managers internalize lessons from such collaboration, becoming more precise in their communication of assumptions, more aware of their intellectual biases, and more attuned to the tradeoffs inherent to any decision.

As investors, we find these lessons especially powerful given the limits we face in what can actually be known, or reasonably predicted, in financial markets. Even the best human practitioners, working in concert with the most advanced optimizers, won’t be able to solve every problem in investing—but we’ll happily take that option over going it alone."

Let’s recognize that most firms do not have the resources or experience of DE Shaw. For those that don’t, Alpha Theory is a tool that allows fundamental managers to build their own unique position-sizing optimizer.
